We Are Not Saved

If Anyone Builds It, Everyone Dies - Yudkowsky at his Yudkowskiest

- Author: Various

- Narrator: Various

- Publisher: Podcast

- Duration: 0:10:07

Information:

Synopsis

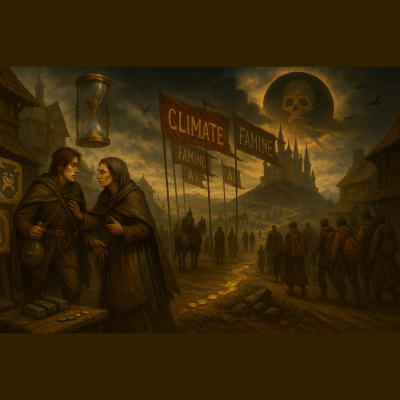

Don’t hold back, guys, tell us how you really feel.

If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All

By: Eliezer Yudkowsky and Nate Soares

Published: 2025

272 Pages

Briefly, what is this book about?

This book makes the AI doomer case at its most extreme. It asserts that if we build artificial superintelligence (ASI) then that ASI will certainly kill all of humanity. Their argument in brief: the ASI will have goals. These goals are very unlikely to be in alignment with humanity’s goals. This will bring humanity and the ASI into conflict over resources. Since the ASI will surpass us in every respect it will have no reason to negotiate with us. Its superhuman abilities will also leave us unable to stop it. Taken together this will leave the ASI with no reason to keep us around and many reasons to eliminate us, thus the “Everyone Dies” part of the title.

What's the author's angle?

Yudkowsky is the ultimate AI doomer. No one is more vocally worried about misaligned ASI than he. Soares is Rob